Using Omni’s AI Assistant on the Semantic Layer

Can the AI assistants in modern data tools replace analysts for self-serve, or is there still some way to go?

This is part three of a three-part series on using AI in data engineering. In Part 1, we looked at how you can use AI in data modelling. In Part 2, we added tests and monitors using AI. In this part, we build a self-serve AI semantic layer using Omni’s AI Assistant.

In this post, we’ll build a self-serve AI and analytics layer we can use in Omni, a BI tool. It’s long been a dream for data teams to hand end-users the keys to the castle and let them self-serve their own requests. It has sounded good on paper, but the results have often been so-so: impressive in demos, less so in practice.

With the latest tools, and using Omni’s AI Assistant, there’s a real shot at making something that’s actually useful. To set expectations, it will require guardianship over the data, only exposing specific tables (organised in “Topics”) and fields we have 100% trust in.

Plan for this section

Set the data up for AI in Omni’s semantic layer

Provide AI context about our dataset

Using the self-serve AI

Testing limitations of the self-serve AI

Here’s what we’ll end up with: a self-serve interface available to everyone in the company, where they can switch between classic BI and asking the data questions.

What it looks like when the going is good.

Setting our data up for AI

First things first. Let’s get our transformed data marts and metrics ready to be consumed by Omni’s AI Assistant.

Omni’s AI Assistant works off ‘Topics.’ In Omni, a Topic is a curated dataset that organizes and structures data around a specific area of interest or analysis. You can explore Topics much as you’d explore other datasets. They’ve just been joined and prepared and may have additional metadata such as field and topic descriptions.

In our case, our Topic is based on our house_statistics data mart.

We can drag and drop dimensions, pivot the data and visualize Topics in charts, just as you’d expect from any BI tool.

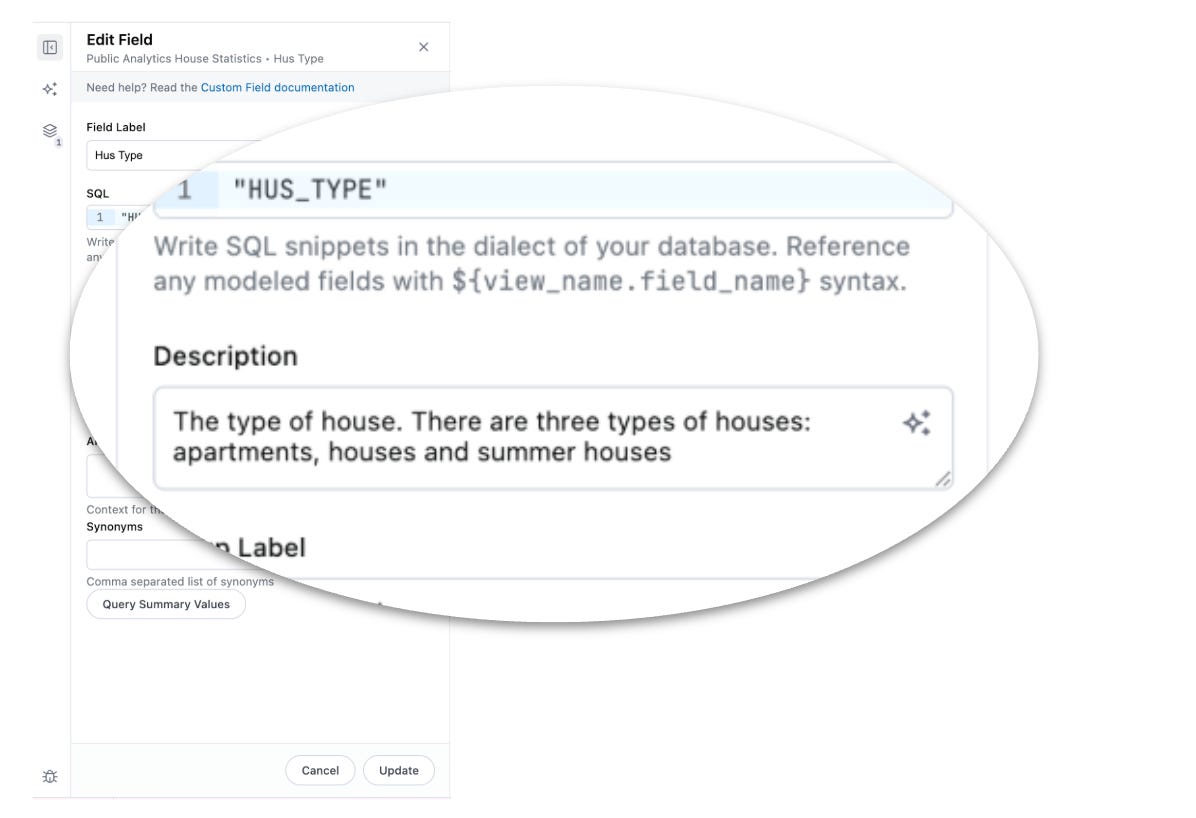

The more context we can add, the better the AI Assistant will be. In this case, we go in and add specific descriptions to each field in the Topic.

So far so good, we now have a well-documented Topic ready to query.
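Since the descriptions carry so much of the weight, it can help to check that none were skipped. Below is a minimal sketch of such a check. It assumes the Topic’s fields have been exported into a simple list by some means; the `Field` class, the `missing_descriptions` helper, the 15-character threshold, and the sample descriptions are all hypothetical and not part of Omni.

```python
from dataclasses import dataclass


@dataclass
class Field:
    """Hypothetical stand-in for one field exposed in a Topic."""
    name: str
    description: str = ""


def missing_descriptions(fields, min_length=15):
    """Return names of fields whose description is absent or too short to help."""
    return [f.name for f in fields if len(f.description.strip()) < min_length]


# Illustrative export of the Topic's fields; the descriptions are made up.
fields = [
    Field("Kvdrm Pris", "Average sale price per square meter."),
    Field("Kvartal", "Quarter in which the sale was recorded."),
    Field("hus type", ""),
    Field("postnummer", "Post code"),
]

# Lists the fields that still need a proper description.
print(missing_descriptions(fields))
```

A check like this could run whenever the Topic changes, so a newly exposed field doesn’t reach the AI Assistant undocumented.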

Topics can also be given an ai_context parameter: free-text guidance that the model keeps in mind when it accesses the data. This gives the model relevant information it can’t infer from field names alone. In our case, for example, we tell it to respond only with data that’s available, and we point it to details about the raw source of the data.

ai_context: |-
  This topic focuses on the sale prices of square meter houses. The main concepts are hus type (house type) and postnummer (post code).
  Only respond with accurate answers based on the data that you're aware of.
  Don't pivot unless there is more than one dimension included in the query.
  Typical questions will be focused on square meter price over time, percentage developments, and comparison of house types and post codes.
  - If asked about square meter price, always use the Kvdrm Pris field
  - If asked about dates, always use the Kvartal (quarter) column
  - If asked about the origins of the data, refer to the FAQs: https://finansdanmark.dk/tal-og-data/boligstatistik/boligmarkedsstatistikken/spoergsmaal-og-svar-om-boligmarkedsstatistikken/

Omni’s AI Assistant is now available for everyone to use, with a few key setups that make it good to go:

Only data from the relevant Topic is included to avoid going outside the context

Specific descriptions have been added to each field and to the dataset

Using AI context, we’ve given the LLM guidance on how to use the data
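The AI context names specific fields, so it can silently go stale if a field is renamed. Here is a rough sketch of a guard against that. It assumes the rules keep the “always use the … field/column” wording used above and that the Topic’s field names are available as a plain set; `referenced_fields`, `unknown_fields`, and the regular expression are illustrative helpers, not an Omni feature.

```python
import re

# Matches rules such as "always use the Kvdrm Pris field" or
# "always use the Kvartal (quarter) column". The wording is an assumption.
RULE = re.compile(r"always use the (.+?)(?: \(.*?\))? (?:field|column)")


def referenced_fields(ai_context):
    """Extract the field names that the AI context tells the model to use."""
    return [match.group(1) for match in RULE.finditer(ai_context)]


def unknown_fields(ai_context, topic_fields):
    """Return referenced field names that are not exposed in the Topic."""
    return [name for name in referenced_fields(ai_context) if name not in topic_fields]


ai_context = """
- If asked about square meter price, always use the Kvdrm Pris field
- If asked about dates, always use the Kvartal (quarter) column
"""

# Assumed set of field names exposed in the Topic.
topic_fields = {"Kvdrm Pris", "Kvartal", "hus type", "postnummer"}

# An empty list means every field named in the context still exists.
print(unknown_fields(ai_context, topic_fields))
```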

What it looks like to end users. Ask any questions about the dataset we provided and get human-understandable answers in seconds.

The verdict: What works and what doesn’t

So far so good, but do we now have the one-size-fits-all answer to the never-ending stream of tedious data requests? I’m not quite sure.

As soon as you stretch it a bit, you end up with funky answers. In the video below are a few examples.

When asked to show apartment prices over time, the time aggregation seems off and the visualization looks odd

When asked about the most expensive area, it doesn’t make it clear which house types it’s about or what the time frame is

When asked for the time frame, it provides a non-conclusive answer

There are plenty of examples like this, where it’s still easier to jump to the actual BI editor or ask an analyst for help.

That said, will we get there soon? Probably. I think part of it is tooling maturity, but the other part is data curation.

Before opening the gates to the AI Assistant across the company, I’d consider the following:

Limit datasets to only a few, highly curated ones

Have specific use cases in mind (e.g., salespeople asking questions about their client performance) and stress test these through user tests with end-users to see if the AI consistently delivers

Measure an acceptance or positive-response rate (the share of answers users accept) and decide up front what level you’d be satisfied with

Iteratively go back and adjust the AI context and metadata descriptions to err on the side of it saying “I don’t know” instead of making unreasonable assumptions
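To make the acceptance-rate idea concrete, here is a small sketch of how the results of a user test could be tallied per use case. The feedback records are made up for illustration, the 80% threshold is an arbitrary assumption, and `acceptance_rates` and `below_threshold` are hypothetical helpers.

```python
from collections import defaultdict


def acceptance_rates(feedback):
    """Map each use case to the share of answers that users accepted.

    feedback is an iterable of (use_case, accepted) pairs.
    """
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for use_case, ok in feedback:
        totals[use_case] += 1
        accepted[use_case] += int(ok)
    return {use_case: accepted[use_case] / totals[use_case] for use_case in totals}


def below_threshold(rates, threshold=0.8):
    """Return the use cases whose acceptance rate falls short of the threshold."""
    return sorted(use_case for use_case, rate in rates.items() if rate < threshold)


# Made-up results from a user test: (use case, did the user accept the answer?)
feedback = [
    ("price over time", True),
    ("price over time", False),
    ("price over time", True),
    ("most expensive area", False),
    ("most expensive area", False),
    ("compare house types", True),
]

rates = acceptance_rates(feedback)
# Use cases to revisit in the AI context and field descriptions.
print(below_threshold(rates))
```

Use cases that land below the threshold are the ones to take back into the AI context and metadata descriptions before rolling out more widely.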

We now have a curated AI-ready semantic layer, built for both classic BI and AI-powered exploration (with some limitations!) 🎉

Here’s a recap of what we’ve done in Part 3:

Created a curated Topic in Omni with selected fields we trust

Added field descriptions and metadata to improve AI understanding

Defined AI context guidance to steer the model’s responses

Enabled a self-serve AI Assistant for everyday data questions

Tested common use cases and spotted where AI still falls short

That concludes this series. Check out the other parts here: Part 1, Using AI for Data Modeling in dbt, and Part 2, Using AI to build a robust testing framework.