How should analysts spend their time

How to do more of the work that matters and less of the work that don’t

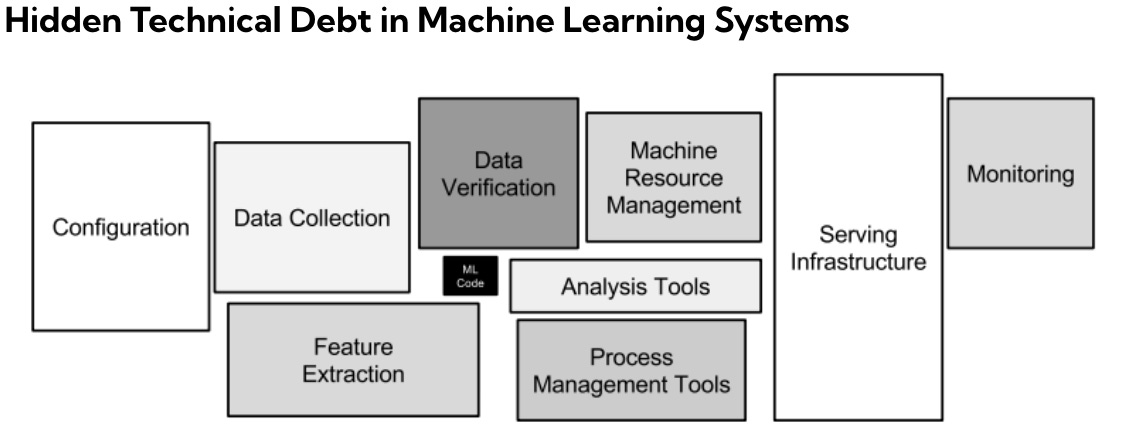

When I was at Google and started building machine learning models, one of the first things I was shown was the image below. It highlighted that writing the actual machine learning code is only a fraction of the total work I’d be doing. This turned out to be true.

But working in data is much more than just machine learning so what does this chart look like for a data analyst?

The answer is not a simple one. There will be times where it makes sense to focus on building dashboards and underlying data models and other times where it makes more sense to focus on analysis and insights.

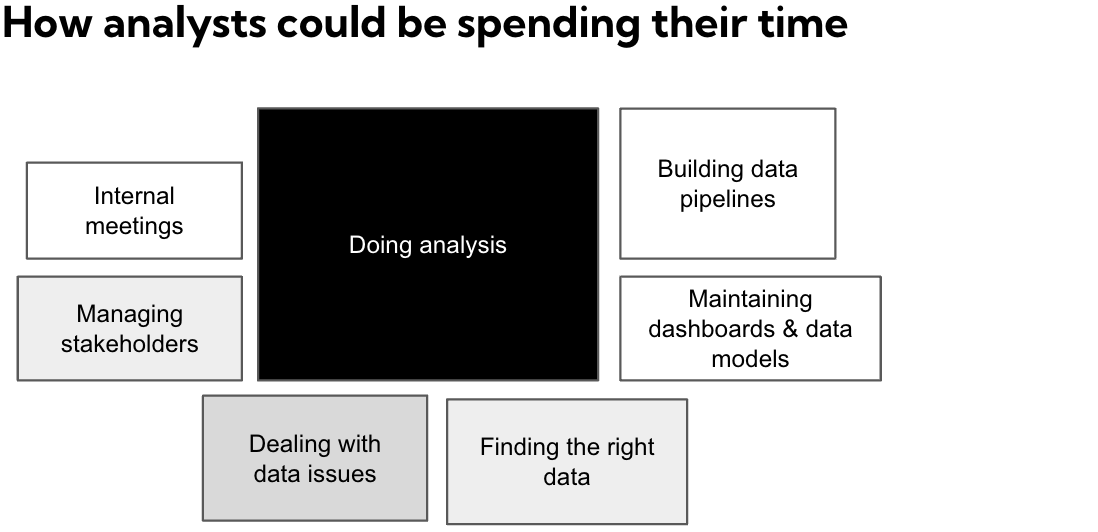

With that in mind, this is my assessment of how an average week looks like in the life of an analyst.

Just as with machine learning there are many different tasks that are just as valuable as doing analysis.

However, one common topic is that data people spend upwards 50% of their time working reactively, often dealing with data issues or trying to find or get access to data. Examples of this are:

A stakeholder mentions that a KPI in a dashboard looks different to what it did last week and you have to answer why this is

A data test in dbt is failing and you have to understand what’s the root cause of the issue

You want to use a data point for a new customer segment and there are five different definitions when you search in Looker and it’s unclear which one to use

Allocating time differently

Here’s an alternative for how data analysts could be spending their time

So, what’s different?

More time for analysis

Analysts should do… wait for it… analysis. They should have the freedom to get an idea on the way in to work and have it answered by lunch time. This type of work often requires long stretches of focused time, having the right tools in place and an acceptance that it's okay to spend half a day on work that may go nowhere.

I’ve seen this done particularly well when data analysts work closely with other people such as user research on quickly formulating hypotheses for which A/B tests to run well knowing that not all of them will be a home run.

“Taking that extra time to making sure you’re doing the right work instead of doing the work right is often some of time best spent”

Less time spent dealing with data issues

Data teams I’ve spoken to spend upwards 20% of their day on dealing with data issues and it gets more painful as you scale. When you are a small data team you can look at a few data models to get to the root cause. As you scale you start having dozens of other data people relying on your data models, engineering systems breaking without you being notified and code changes that you had no idea about impacting your data models.

Less time finding what data to use

The difficulty of finding the right data to use increases exponentially with data team size as well. When you’re a small team you know where everything is. As you get larger it becomes increasingly difficult and you often run into issues around having multiple definitions of the same metric. As you get really large just getting access to the right data can in the worse instances take months.

In search for the optimal time allocation

The optimal allocation is different for everyone, but my guess is that you’ll have room for improvements. The most important first step is to be deliberate about how you spend your time.

“I’d recommend setting aside a few minutes each week to look back at the past week and note down how you spent your time. If you do this regularly you’ll get a surprisingly good understanding of whether you spend your time right”

Finding the right data

Data catalogues are great in theory but they often fail to live up to their potential. Instead, they end up as an afterthought and something not too dissimilar from the broken window theory starts to happen. As soon as people stop maintaining a few data points in the data catalogue you may as well throw the whole thing out the window. Luckily, things are moving in the right direction and many people are thinking about how we get the definitions and metadata to live closer to the tools people already use everyday.

Reduce time spent dealing with data issues

The last few years data teams have gotten larger and much more data is being created. Dealing with data issues was easy when you were a five person data team with daily stand-ups. It’s not so straightforward when there’re dozens of data people all creating different data left and right.

We need to be able to apply some of what made life easy when you were a smaller data team to larger data teams. Some ideas of how this could be done:

Every data asset should have an owner

Encapsulate data assets into domains with public and private access endpoints so not all data is accessible to everyone

Close the loop to data producers with shared ownership so issues are caught as far upstream as possible

Enable everyone to debug data issues so they don’t have to be escalated to the same few “data heroes“ each time

“Instead of treating every data issue as an ad-hoc fire, invest in fundamental data quality and controls that make data issues less likely to reoccur”

If you have some ideas of how to improve where you spend your time in a data team let me know!

I don't know if you've seen this before, but GitLab's Data Team (and the company in general) maintains a public handbook (https://about.gitlab.com/handbook/business-technology/data-team/). Based on my reading of that resource, it seems like GitLab applies some of the principles you mention. For instance, "Every data asset should have an owner" and "Close the loop to data producers with shared ownership so issues are caught as far upstream as possible" seem to be in line with their platform page that lists out their data sources (https://about.gitlab.com/handbook/business-technology/data-team/platform/#data-sources) as well as their definition of "tiers" (https://about.gitlab.com/handbook/business-technology/data-team/platform/#tier-definition).

Your quote “Taking that extra time to making sure you’re doing the right work instead of doing the work right is often some of time best spent” is great. People in any domain should ask themselves whether they're working on the right stuff all the time.

Another great post Mikkel. What makes you lose time on getting access to data?