Data tests and the broken windows theory

Data tests are wonderful but hard to get right and creates a lot of overhead work for data teams

The broken windows theory can be traced back to criminology and suggests that if you leave a window broken in a compound everything else starts to fall apart. If residents start seeing that things are falling apart they stop caring about other things as well.

We can draw the same analogy to the world of data and data tests in particular.

Data tests are wonderful. They let you set expectations for your data and you can deploy hundreds of small robots that watch out for anything going wrong.

I have no doubt that data tests are here to stay. Once you’ve been notified of a breaking change you would have otherwise never caught, it’s hard to imagine a future without them.

Despite all their promise, data tests too often end up not living up to their full potential.

The problems with tests can be grouped into three areas

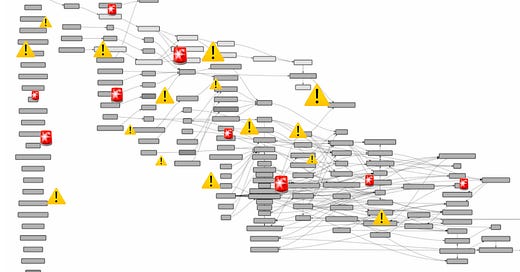

There are too many alerts from test failures causing people to not pay attention to them

The signal to noise ratio is too low and data teams waste time on false positive test failures

Data teams don’t implement tests in the right places and data issues are caught by end-users

Let’s dig into each one

Overload of test failures

Large data data teams (50+) with thousands of data models are most often dabbling with this. They have dozens or even hundreds of test failures in a given day and knowing which ones to pay attention to is no easy task.

This is the data team's version of the broken windows theory.

It’s hard to know who is responsible for which test failure. Sometimes people who have left the company end up getting tagged as responsible in your Slack monitoring channel. Data floaters can no longer manage and route test failures across dozens of data people working across many business domains.

This results in important test failures that you should absolutely have paid attention to, going unnoticed as they drown in the pool of less important test failures.

Low signal to noise

Investigating a test failure can be a lot of work. You have to understand upstream models, understand who changed what in the codebase and at times investigate upstream data sources owned by engineering teams.

Too often test errors are not representing actual errors in the data. For example, they may be due to a delayed pipeline and by the time you get to the root cause of the test failure it may have just worked itself out.

This leads to a lot of frustration and causes data teams to ignore tests but also gives them less incentive to write tests in the first place.

Tests not implemented in the right places

Data issues caught by end-users directly in dashboards are the bane of the existence of any data team. It happens frequently that issues that the data team did not anticipate creeps in and goes undetected by the data team until a stakeholder starts questioning a dashboard. In the worst case they have already made decisions based on inaccurate data.

With many data teams approaching hundreds or thousands of data models it’s difficult to know where to put the right tests and how to keep them relevant.

What to do about it?

We need a mental model shift where we think about having published a data model as being accompanied by a tax. Depending on the complexity of the data model that tax may be anywhere from 2% to 20% of your time which you should expect to maintain the data model and keep an eye out for test failures and other things going wrong. This naturally limits how many data models each person can be expected to own. Machine learning engineers have operated in this way for some time. They know that when they ship a new machine learning model they’ve committed themselves to ongoing work around monitoring quality metrics, drift and uptime.

With that being said, this is where I would focus to get better at data tests.

Have a clear idea about which tests are important. For example by having a clear definition of data model criticality that you implement in dbt or in your data catalogue. This gives you a tool to know what you should absolutely pay attention to and what you can ignore for a little while.

Get really good at ownership. If you have all test failures in one Slack channel or unclear expectations about who owns what you’ll run into trouble. Instead, the right test failures should get in front of the right person at the right time. Floater duties are a means to an end and we should get better at getting more precise with which test warnings are brought to who.

Have good test hygiene. Have clear rules about what test coverage is expected for different types of models based on how important they are. Have rules on how you avoid broken windows. For example, set aside a Friday each month where you sort your tests by those that have been failing for the longest and make it a goal to clean them up so no test has been failing for more than five consecutive days. Make a rule that every time your stakeholders spot a data issue that wasn’t caught by a test, you implement a new test so you’ll catch it proactively the next time.

What the future holds

Data tests are here to stay but we’ve only scratched the surface of their full potential. Whether the future means automated tests popularised by data observability tools such as Soda or Monte Carlo, having a data catalogue that becomes the de facto for defining ownership or the metric layer bringing everything together I think we will see a lot of innovation here.

If you have any takes on how do get better at data tests let me know!

We can learn a lot from manufacturing industry. Data is the “material” used to “manufacture” products in tech companies. I would love to discuss some ideas from the aerospace industry that could be extended to data quality.

Cheers

Alex from multilayer.io

Great article - I agree with the premise about alert fatigue completely. I get where you were going with the broken windows analogy but you might get pushback from people since there’s a negative connotation associated with trying to apply it in real life in New York for example